Johanna Wald\*, Helisa Dhamo\*, Nassir Navab, Federico Tombari

Technical University of Munich, Google

\* Authors contributed equally.

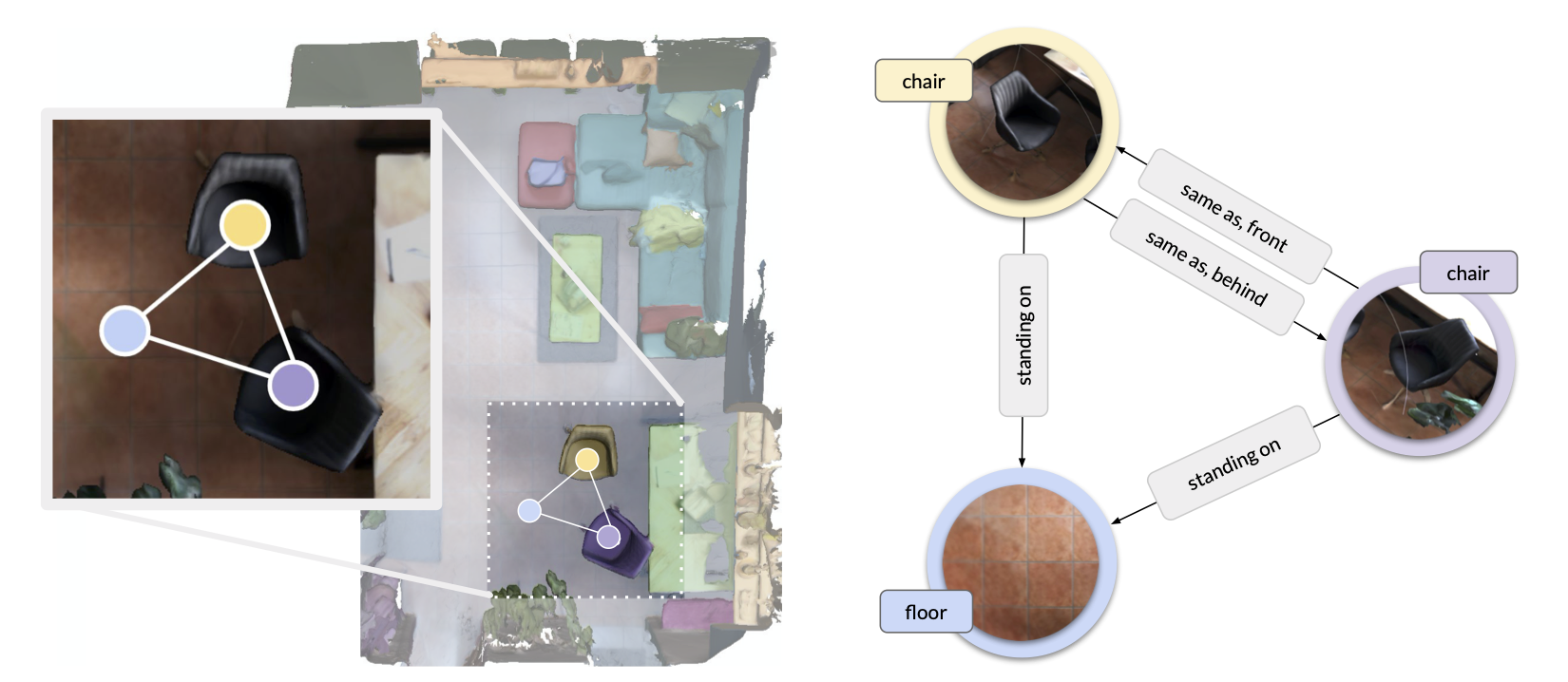

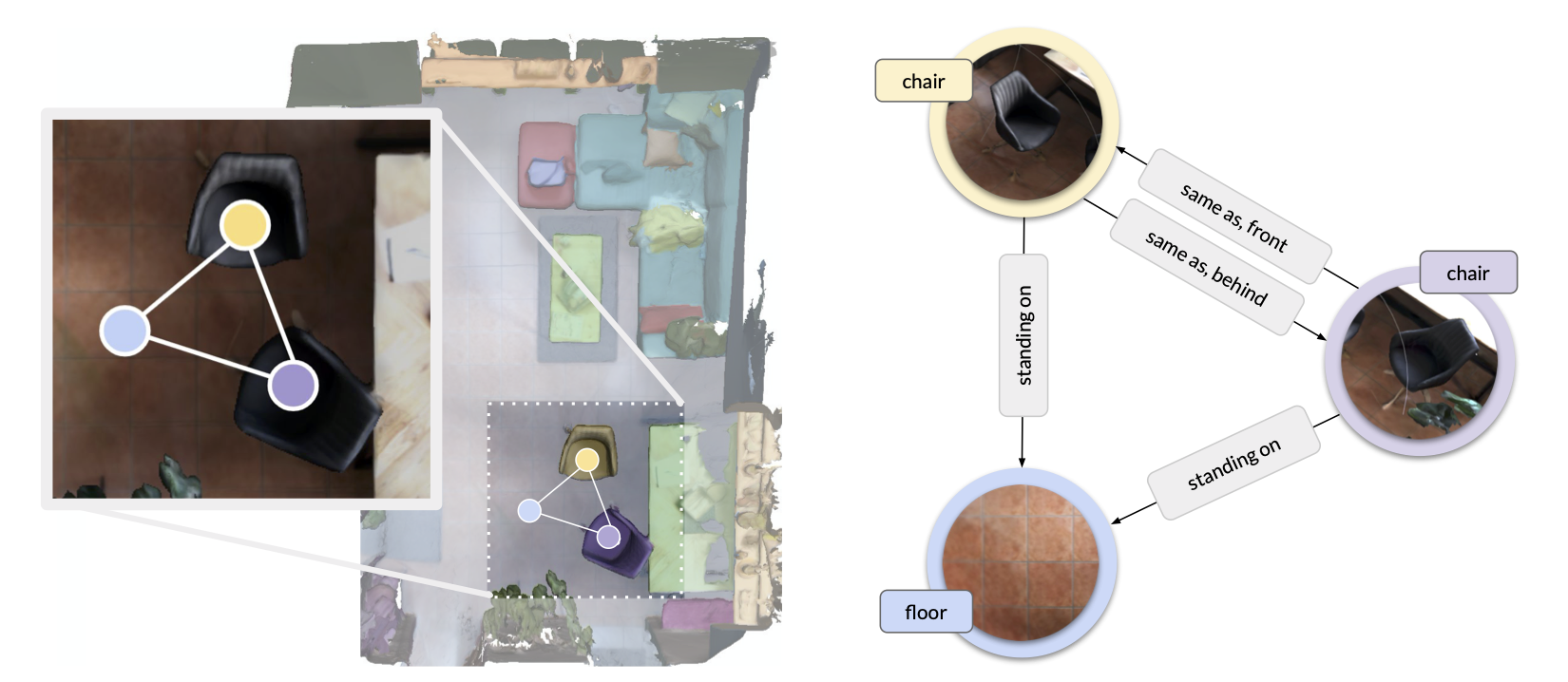

In our work, we focus on scene graphs, a data structure that organizes the entities of a scene in a graph, where objects are nodes and their relationships are modeled as edges. We use inference on scene graphs as a means of 3D scene understanding, mapping both objects and their relationships. In particular, we propose a learned method that regresses a scene graph from the point cloud of a scene. Our novel architecture is based on PointNet and Graph Convolutional Networks (GCN). In addition, we introduce 3DSSG, a semi-automatically generated dataset that contains semantically rich scene graphs of 3D scenes. We show the application of our method in a domain-agnostic retrieval task, where graphs serve as an intermediate representation for 3D-3D and 2D-3D matching.
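The scene graph data structure and its role as an intermediate representation for retrieval can be illustrated with a small sketch. This is a minimal Python example under stated assumptions, not the authors' implementation: the names `SceneGraph`, `graph_similarity`, and `retrieve` are hypothetical, the object and predicate labels are illustrative, and the Jaccard-overlap score is a simple stand-in for the matching used in the paper. It ignores the learned PointNet/GCN features entirely and only shows how objects (nodes) and relationships (edges) can be stored and compared.

```python
from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Hypothetical minimal scene graph: objects are nodes, relationships are edges."""
    # Maps an object instance id to its semantic class label.
    nodes: dict[int, str] = field(default_factory=dict)
    # Directed, labeled edges stored as (subject_id, predicate, object_id).
    edges: list[tuple[int, str, int]] = field(default_factory=list)

    def add_object(self, obj_id: int, label: str) -> None:
        self.nodes[obj_id] = label

    def add_relationship(self, subj: int, predicate: str, obj: int) -> None:
        if subj not in self.nodes or obj not in self.nodes:
            raise KeyError("both endpoints must be added as objects first")
        self.edges.append((subj, predicate, obj))

    def labeled_triplets(self) -> set[tuple[str, str, str]]:
        # Replace instance ids by class labels so that graphs built from
        # different sources (e.g. two scans, or an image and a scan) are comparable.
        return {(self.nodes[s], p, self.nodes[o]) for s, p, o in self.edges}


def jaccard(a: set, b: set) -> float:
    # Intersection over union of two sets; two empty sets count as identical.
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def graph_similarity(g1: SceneGraph, g2: SceneGraph) -> float:
    # Illustrative score: mean of object-label overlap and relationship overlap.
    objects = jaccard(set(g1.nodes.values()), set(g2.nodes.values()))
    relations = jaccard(g1.labeled_triplets(), g2.labeled_triplets())
    return 0.5 * (objects + relations)


def retrieve(query: SceneGraph, database: dict[str, SceneGraph]) -> list[tuple[str, float]]:
    # Rank database scenes by similarity to the query graph, best match first.
    scores = [(name, graph_similarity(query, g)) for name, g in database.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


def build(objects: dict[int, str], relations: list[tuple[int, str, int]]) -> SceneGraph:
    g = SceneGraph()
    for obj_id, label in objects.items():
        g.add_object(obj_id, label)
    for s, p, o in relations:
        g.add_relationship(s, p, o)
    return g


# Usage: a query graph (e.g. predicted from a scan or an image) against two scenes.
database = {
    "kitchen": build({0: "chair", 1: "table", 2: "floor"},
                     [(0, "standing on", 2), (1, "standing on", 2), (0, "close by", 1)]),
    "office": build({0: "desk", 1: "chair", 2: "floor"},
                    [(1, "standing on", 2), (0, "standing on", 2)]),
}
query = build({0: "chair", 1: "floor", 2: "table"},
              [(0, "standing on", 1), (0, "close by", 2)])
ranking = retrieve(query, database)
print(ranking)  # kitchen scores 5/6, office scores 5/12
```

Comparing label-level triplets rather than instance ids is what makes this toy comparison independent of the input modality: any two graphs that share a label vocabulary can be matched, whichever sensor produced them.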

@inproceedings{3DSSG2020,
  title={Learning 3D Semantic Scene Graphs from 3D Indoor Reconstructions},
  author={Wald, Johanna and Dhamo, Helisa and Navab, Nassir and Tombari, Federico},
  booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2020}
}